How to navigate the AI apocalypse as a sane person

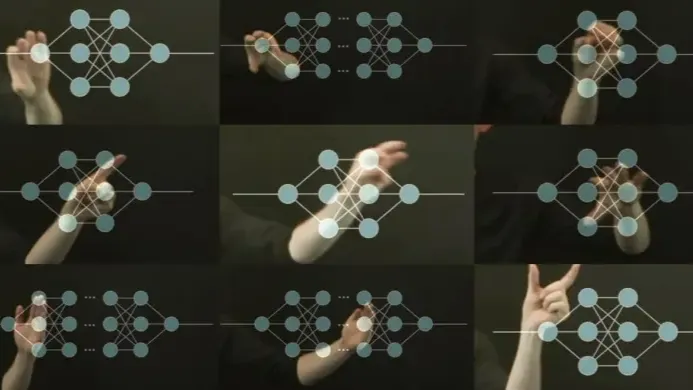

How much can you optimize for generality? To what extent can you simultaneously optimize a system for every possible situation, including situations never encountered before? Presumably, some improvement is possible, but the idea of an intelligence explosion implies that there is essentially no limit to the extent of optimization that can be achieved...

newyorker.com • Why Computers Won’t Make Themselves Smarter

In his 2014 book, Superintelligence, the philosopher Nick Bostrom illustrated the danger using a thought experiment, which is reminiscent of Goethe’s “Sorcerer’s Apprentice.” Bostrom asks us to imagine that a paper-clip factory buys a superintelligent computer and that the factory’s human manager gives the computer a seemingly simple task: produce...

Yuval Noah Harari • Nexus: A Brief History of Information Networks from the Stone Age to AI

True and False Risks of Artificial Intelligence

linkedin.com

The assumption that superintelligences can somehow simulate reality to arbitrary degrees of precision runs counter to what we know about thermodynamics, computational irreducibility, and information theory.

A lot of the narratives seem to assume that a superintelligence will somehow free itself from constraints like "cost of compute", "cost of stori...