GitHub - varunshenoy/super-json-mode: Low latency JSON generation using LLMs ⚡️

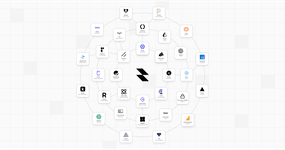

GitHub - haydenbleasel/next-forge: A production-grade boilerplate for modern Next.js apps.

github.com

WebDataset

WebDataset is a library for writing I/O pipelines for large datasets. Its sequential I/O and sharding features make it especially useful for streaming large-scale datasets to a DataLoader.
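Sharding is what lets several DataLoader workers stream a large dataset at once: the data is split into many TAR files, and each worker reads its own subset of them start to finish, so I/O stays sequential. The following is a minimal stdlib sketch of that idea. The helper `shards_for_worker` and the shard names are hypothetical and only illustrate the general technique. They are not WebDataset's own API.

```python
def shards_for_worker(shards, worker_id, num_workers):
    """Hypothetical helper: round-robin assignment of shard files to one worker.

    Each worker gets a disjoint subset, and together the workers cover every shard.
    """
    if not 0 <= worker_id < num_workers:
        raise ValueError("worker_id must be in [0, num_workers)")
    return shards[worker_id::num_workers]


# Hypothetical shard names, numbered with zero padding so they sort in order.
shards = [f"train-{i:06d}.tar" for i in range(5)]

for worker_id in range(2):
    # Each worker would open its shards one after another and read them sequentially.
    print(worker_id, shards_for_worker(shards, worker_id, num_workers=2))
# 0 ['train-000000.tar', 'train-000002.tar', 'train-000004.tar']
# 1 ['train-000001.tar', 'train-000003.tar']
```

Round-robin keeps the per-worker load roughly even when shards are similar in size. A contiguous split would work just as well for sequential reading.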

The WebDataset format

A WebDataset file is a TAR archive containing a series of data files. All successive data files with the same prefix are consider...
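The grouping rule can be illustrated with Python's standard `tarfile` module. This minimal sketch streams an archive in order and merges consecutive members that share a prefix into one sample dict. It assumes that the prefix is everything before the first dot of the file's base name, which is how WebDataset splits keys from extensions. The helper names `write_tar`, `split_key` and `iter_samples` are hypothetical and are not the library's API.

```python
import io
import tarfile


def write_tar(files):
    """Pack (name, bytes) pairs into an in-memory TAR archive, preserving order."""
    buf = io.BytesIO()
    with tarfile.open(fileobj=buf, mode="w") as tar:
        for name, data in files:
            info = tarfile.TarInfo(name=name)
            info.size = len(data)
            tar.addfile(info, io.BytesIO(data))
    buf.seek(0)
    return buf


def split_key(name):
    """Split 'dir/sample001.cls' into ('dir/sample001', 'cls') at the first dot of the base name."""
    dirname, _, base = name.rpartition("/")
    stem, _, ext = base.partition(".")
    key = f"{dirname}/{stem}" if dirname else stem
    return key, ext


def iter_samples(fileobj):
    """Read the archive sequentially ('r|' stream mode) and yield one dict per prefix run."""
    current_key, sample = None, {}
    with tarfile.open(fileobj=fileobj, mode="r|") as tar:
        for member in tar:
            if not member.isfile():
                continue
            key, ext = split_key(member.name)
            if key != current_key and sample:
                yield sample  # the prefix changed, so the previous sample is complete
                sample = {}
            current_key = key
            sample["__key__"] = key
            # In stream mode a member must be read before advancing to the next one.
            sample[ext] = tar.extractfile(member).read()
    if sample:
        yield sample


archive = write_tar([
    ("sample000.jpg", b"<jpeg bytes>"),
    ("sample000.cls", b"3"),
    ("sample001.jpg", b"<jpeg bytes>"),
    ("sample001.cls", b"7"),
])
for s in iter_samples(archive):
    print(s["__key__"], sorted(k for k in s if k != "__key__"))
# sample000 ['cls', 'jpg']
# sample001 ['cls', 'jpg']
```

Because grouping only looks at *successive* members, the reader never has to seek backwards. This is what makes the format suitable for purely sequential streaming.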


DiscoLM German 7B v1 - GGUF

Model creator: Disco Research

Original model: DiscoLM German 7B v1

Description

This repo contains GGUF format model files for Disco Research's DiscoLM German 7B v1.

These files were quantised using hardware kindly provided by Massed Compute.

About GGUF

GGUF is a new format introduced by the llama.cpp team on August 21st 2023. I...
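To make "format" a little more concrete, this minimal sketch parses the fixed-size header at the start of a GGUF file. It assumes a little-endian file of format version 2 or later, where the header is the 4-byte magic `GGUF` followed by a `uint32` version, a `uint64` tensor count and a `uint64` metadata key/value count. The function name `read_gguf_header` is hypothetical, and the header bytes below are synthetic rather than taken from the DiscoLM files.

```python
import struct

GGUF_MAGIC = b"GGUF"
HEADER_SIZE = 4 + struct.calcsize("<IQQ")  # magic + version + two 64-bit counts = 24 bytes


def read_gguf_header(data: bytes):
    """Parse the GGUF header (assumes version >= 2 and little-endian byte order)."""
    if len(data) < HEADER_SIZE:
        raise ValueError("too short for a GGUF header")
    if data[:4] != GGUF_MAGIC:
        raise ValueError("not a GGUF file (bad magic)")
    version, tensor_count, kv_count = struct.unpack_from("<IQQ", data, 4)
    return {"version": version, "tensor_count": tensor_count, "metadata_kv_count": kv_count}


# Synthetic header bytes, built only for illustration.
header = GGUF_MAGIC + struct.pack("<IQQ", 3, 2, 5)
print(read_gguf_header(header))
# {'version': 3, 'tensor_count': 2, 'metadata_kv_count': 5}
```

With a real model file you would read the first 24 bytes, for example `open(path, "rb").read(24)` for some local `.gguf` path. The metadata key/value pairs and tensor descriptions that follow the header are not parsed here.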
