Sublime

An inspiration engine for ideas

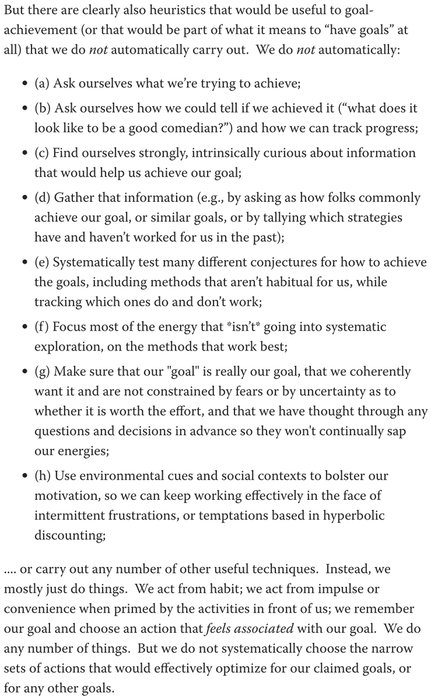

A gem from the LessWrong community: 'Humans are not automatically strategic.'

"A large majority of otherwise smart people spend time doing semi-productive things, when there are massively productive opportunities untapped." https://t.co/5eR7t5YXLN

Superintelligence: Paths, Dangers, Strategies

amazon.com

Here's my conversation with Roman Yampolskiy (@romanyam), AI safety researcher who believes that the chance of AGI eventually destroying human civilization is 99.9999%.

I will continue to chat with many AI researchers & engineers, most of whom put p(doom) at <20%, but it's important to balance those technical conversations...

Lex Fridman

x.com

In his 2014 book, Superintelligence, the philosopher Nick Bostrom illustrated the danger using a thought experiment, which is reminiscent of Goethe’s “Sorcerer’s Apprentice.” Bostrom asks us to imagine that a paper-clip factory buys a superintelligent computer and that the factory’s human manager gives the computer a seemingly simple task: produce...